The Bryan Johnson Approach to Eng Productivity

I recently watched Netflix’s Bryan Johnson documentary and came away both intrigued and amused by his extreme quest to maximize health and minimize biological age.

It’s a story that rubs some people the wrong way: there’s a natural pushback when someone tries to defeat a universal part of the human condition (i.e., aging). And, as scientists in the documentary noted, one individual’s relentless n-of-1 experimentation doesn’t immediately revolutionize science. But if you look beyond his pursuit of “don’t die,” Johnson strikes me as someone who:

Tries to be kind and positive, even in the face of a Twitter troll.

Shares what he learns but doesn’t shove it down anyone’s throat.

In contrast, I hate reading headlines of founders bragging about how many raw hours they make their teams work. In many cases, when you dig into it, these teams produce pitifully little for the time they burn. It’s an unhealthy narrative, and one I hate to see perpetuated.

Bryan Johnson opens up about the same topic in the documentary. When reflecting on his grinding work ethic at Braintree, he talks about how the unhealthy culture led him to dark depression and suicidal thoughts:

I do not fear death. I sat at its doorstep for a decade alongside chronic depression. Desperately wishing I didn’t exist. Had it not been for my three children, I probably would have taken my life.

https://x.com/bryan_johnson/status/1683261029281861632

By this measure, I don’t find him offensive. If anything, I admire his curiosity and his advocacy for general health. The controversy arises when you take something as fundamental as aging and say, “Let’s see how far we can push this.” And yet, it also made me ask a fun question: What would the engineering equivalent be?

I spend a lot of time working on developer tools—things that measure productivity, help teams ship better code, and encourage best practices. So naturally, when I see Bryan Johnson pushing the limits of human health, I start to wonder: How far can we push the “health” of an engineering organization?

The (Sometimes Awkward) Parallels

In health, you’ve got the Hubermans and Bryan Johnsons of the world bringing attention to metrics, interventions, and ways to improve longevity. In software, many of us are doing the same for engineering velocity. It’s nowhere near as existential as not aging—but the desire to “ship fast” and find new productivity frontiers does mirror the relentless optimization we see in health circles.

There’s an obvious counter-critique to chasing engineering productivity: “Why are you obsessing over metrics and throughput? The point of software isn’t just to do more, it’s to build something valuable.” Fair question—just like people ask whether the obsession with living longer comes at the cost of living well. We see it in Bryan Johnson’s lifestyle: is the joy of daily life compromised by 100 pills and rigid routines?

For an engineering team, there’s a similar risk. If you over-optimize for raw productivity (lines of code, PR count, etc.), you might forget about the delight or user value that software should bring. So if you do decide to push the limits on engineering speed, it’s important to be clear about the purpose: it’s not to create an iron-fisted roadmap that everyone must follow, but to explore what’s possible and see what useful techniques can trickle out to the broader dev community.

Measuring the Extreme Edge

In practice, a quest for “the most productive engineering team” will produce tons of new questions:

What counts as productivity? Is it PRs per day, deployments per week, or something else entirely?

How do we account for team happiness, code quality, or ultimate business impact?

Is raw speed even the right goal?

I like the analogy to Bryan Johnson because he’s not claiming everyone should mimic him. He does the wacky stuff precisely because it might illuminate a path for new discoveries and knowledge. Similarly, an engineering team that pushes extreme optimization might glean patterns—maybe around code review cadence or PR sizes—that other teams can apply in moderation.

In my own work, analyzing tens of millions of pull requests has already revealed interesting data: the ideal median PR size for velocity, the sweet spot for CI duration, how certain repository sizes benefit from ordered merges, and so on. These patterns help guide how we build tools and talk about best practices. Our internal team has ended up in the top percentile of PRs-per-engineer metrics just by living these approaches day in and day out. But there’s still plenty more to learn, so I’m starting to measure productivity more systematically.

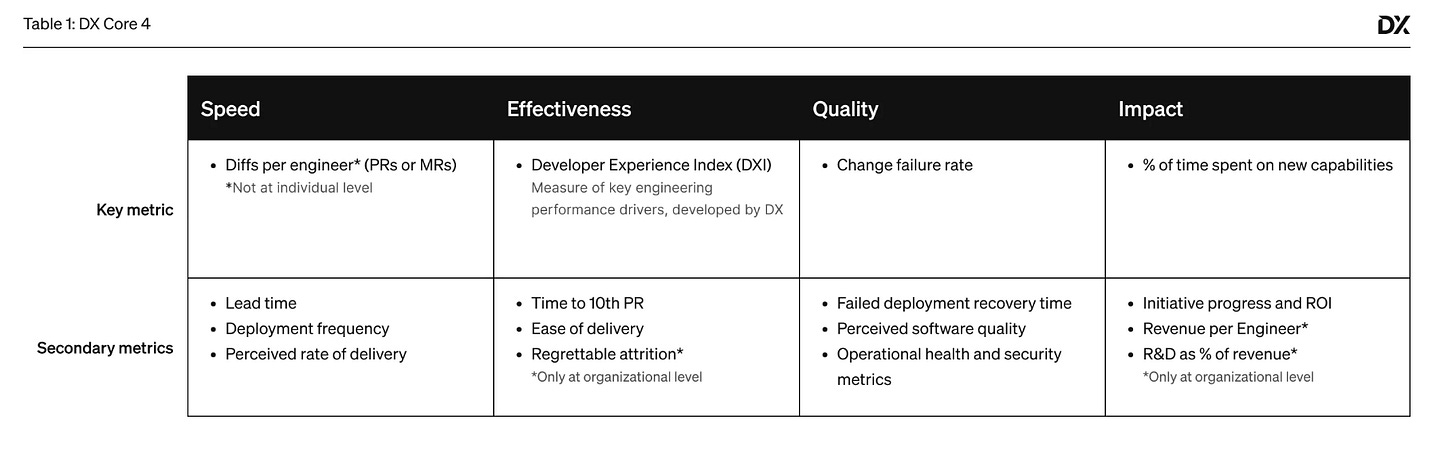

Take the “DX Core 4” from DX. Here’s a snapshot of how Graphite’s engineering team scored in Q4 2024:

Median diffs per engineer per day: 6

Developer experience index: (still to be measured)

Change failure rate: 1.05% (the probability that any single PR causes us to pause deployments)

Impact: 24% (percentage of merged PRs marked as “feat” in conventional commit style)
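
For readers who want to try similar numbers on their own data, here is a minimal sketch of how three of these figures could be computed from a list of merged PRs. It is not Graphite’s actual pipeline. The `MergedPR` record, its `paused_deploys` flag, and the function names are all hypothetical, and the sketch assumes that "per engineer per day" counts only days on which an engineer merged at least one PR, which the definitions above do not specify.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date
from statistics import median


@dataclass(frozen=True)
class MergedPR:
    """Hypothetical record for one merged PR; field names are illustrative."""
    author: str
    merged_on: date
    title: str            # conventional-commit style, e.g. "feat(ui): add dark mode"
    paused_deploys: bool  # assumed flag: did this PR cause a deployment pause?


def median_diffs_per_engineer_day(prs):
    """Median merged PRs per (engineer, day) pair.

    Assumption: only days with at least one merge are counted.
    """
    per_engineer_day = Counter((pr.author, pr.merged_on) for pr in prs)
    return median(per_engineer_day.values())


def change_failure_rate(prs):
    """Fraction of merged PRs that caused a deployment pause."""
    return sum(pr.paused_deploys for pr in prs) / len(prs)


def is_feat(title):
    """True if the commit type before the first colon is exactly 'feat'."""
    prefix = title.split(":", 1)[0].strip().lower()
    # Drop an optional "(scope)" and a trailing "!" breaking-change marker.
    return prefix.split("(", 1)[0].rstrip("!") == "feat"


def impact(prs):
    """Fraction of merged PRs whose title is marked as 'feat'."""
    return sum(is_feat(pr.title) for pr in prs) / len(prs)


# Made-up sample data, purely to show the calls.
sample = [
    MergedPR("ana", date(2024, 10, 1), "feat(ui): add dark mode", False),
    MergedPR("ana", date(2024, 10, 1), "fix: null check", False),
    MergedPR("ana", date(2024, 10, 2), "chore: bump deps", False),
    MergedPR("bo", date(2024, 10, 1), "feat!: new API", True),
]

print(median_diffs_per_engineer_day(sample))
print(f"{change_failure_rate(sample):.2%}")
print(f"{impact(sample):.2%}")
```

The functions assume a non-empty list of PRs. Whether idle days should count toward the median is exactly the kind of definitional choice that changes the headline number, so it is worth stating alongside any figure you publish.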

Even computing these numbers raised big questions: Which metrics are the best benchmarks? What’s “good” or “industry standard”? When does the quest for speed overshadow code sustainability? (Luckily, it’s easy to agree these are at least healthier measurements than hours-per-week worked.)

That’s the messy part of productivity research—every answer breeds new questions. But I do think there’s value in trying. Even if not everyone needs to be hyper-productive, a segment of teams pushing the envelope can surface best practices that benefit all. The same logic that justifies Bryan Johnson’s experimentation might justify an engineering team’s: it helps us learn, debate, and evolve.

Should anyone mimic these measures to the extreme? Probably not. Software teams exist to create value for the world; shipping speed is just a means to that end. Still, I believe in leaning into these experiments—and in sharing the results openly, the successes and the stumbles. If it helps even a fraction of teams discover ways to work more effectively (and maybe with fewer pains along the way), then the experiments are worth it.

If this post piques your curiosity—or makes you roll your eyes—great. We need the skeptics who say, “Why the obsession with shipping speed?” just as much as we need the folks who say, “What’s the absolute limit of code velocity?” Somewhere between these extremes lies a healthy middle ground, informed by the outer edges of experimentation. And if a little self-awareness can keep it from devolving into an engineering version of “don’t die,” I’ll count that as a success.