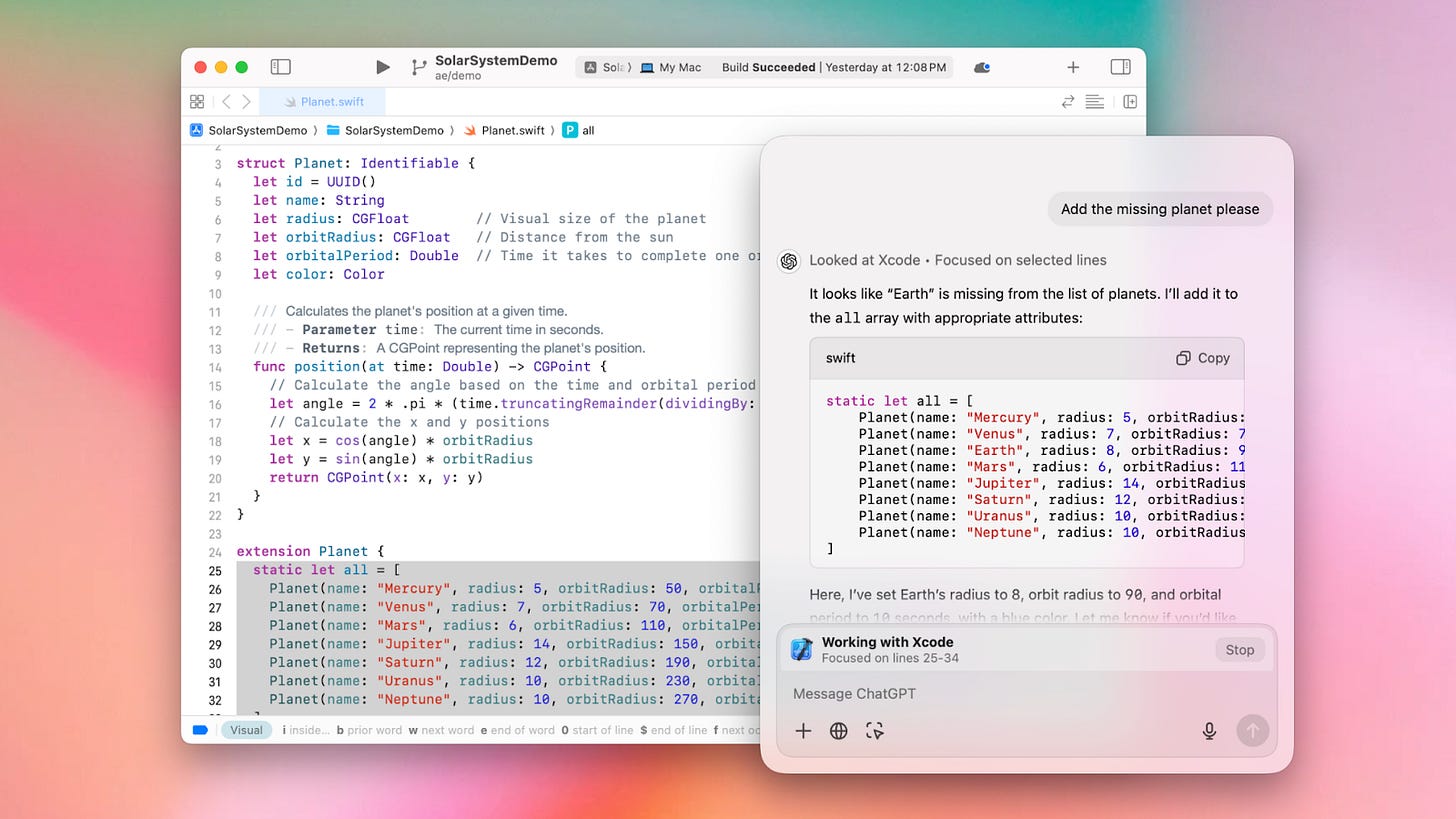

ChatGPT’s “Work With Apps”: Slowly deprecating code editors

On Thursday, OpenAI showcased improvements to ChatGPT’s “work with apps” functionality. Instead of limiting interactions to a chat window, ChatGPT can now read the content of the app you are working in, analyze your current selection, and interact directly with other applications. It does this by pulling context through the operating system’s standard accessibility API. This approach enables rich, contextual interactions, and it also rewards developers who follow accessibility best practices. An app that exposes its content properly to assistive technology is also legible to the assistant, while an app that does not is effectively invisible to it. As tools become more adept at tapping into these standard interfaces, companies gain a stronger business incentive to adhere to accessibility guidelines. The result could be a more inclusive digital ecosystem in which every user, regardless of ability, benefits from advanced interactions.
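As a rough illustration of what “pulling context through the accessibility API” involves, the sketch below models a window as a tree of accessibility elements, finds the element with keyboard focus, and packages its selection and contents as prompt context. It is a plain-Python stand-in and not a binding to any real API. The `AXNode` class, the `extract_context` function, the 2000-character cap, and the shape of the returned dictionary are all hypothetical. The field names only loosely echo the attributes macOS exposes (`AXRole`, `AXValue`, `AXSelectedText`, `AXFocused`, `AXChildren`). The desktop app’s actual implementation may differ.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class AXNode:
    """Hypothetical stand-in for one accessibility element (not a real binding).

    Field names loosely echo macOS attributes: AXRole, AXValue,
    AXSelectedText, AXFocused, AXChildren.
    """

    role: str
    value: str = ""
    selected_text: str = ""
    focused: bool = False
    children: List[AXNode] = field(default_factory=list)


def find_focused(node: AXNode) -> Optional[AXNode]:
    """Depth-first search for the element that currently has keyboard focus."""
    if node.focused:
        return node
    for child in node.children:
        hit = find_focused(child)
        if hit is not None:
            return hit
    return None


def extract_context(window: AXNode, max_chars: int = 2000) -> Dict[str, str]:
    """Package the focused element's content as prompt context (hypothetical shape)."""
    focused = find_focused(window)
    if focused is None:
        return {}  # the app exposes nothing useful through accessibility
    # Keep only the last max_chars characters so the prompt stays bounded.
    start = max(0, len(focused.value) - max_chars)
    return {
        "role": focused.role,
        "selection": focused.selected_text,
        "content": focused.value[start:],
    }


if __name__ == "__main__":
    editor = AXNode(
        "AXTextArea",
        value="def add(a, b):\n    return a - b\n",
        selected_text="return a - b",
        focused=True,
    )
    window = AXNode(
        "AXWindow",
        children=[AXNode("AXToolbar"), AXNode("AXScrollArea", children=[editor])],
    )
    print(extract_context(window))
```

Note the early return. If an app exposes no focused element through accessibility, the assistant gets an empty context. This illustrates why accessibility compliance starts to carry business weight.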

Several apps, including Notion, Warp, and others, are already lining up to integrate. Older, more entrenched platforms, like Microsoft Office, may initially resist. But how long can they hold out when interoperability comes through a consistent, standardized API rather than a proprietary plugin? As momentum builds, reluctance will be harder to justify.

OpenAI’s team has hinted that this is just the beginning. Instead of relying on users to copy and paste between windows, future iterations might integrate even more fluidly into their workflow. Consider what that means for knowledge work: Grammarly already suggests inline edits and improvements without requiring us to hop between tools. Why shouldn’t our AI assistants do the same, not just for grammar or formatting, but for end-to-end creation, editing, and integration of all types of content, including code?

What Does This Mean for Development Tools?

Engineering has historically been a text-based discipline. Sure, we have GUIs for configuring cloud services and dashboards for DNS management, but the trend keeps swinging back to “config-as-code.” We describe our systems in text files, such as YAML configs, Terraform scripts, and Dockerfiles, and store them in code repositories. As AI models become more capable, three distinct patterns are emerging:

1. Smarter Autocompletion in Existing Editors

Tools like VSCode, Cursor, and Windsurf offer contextual suggestions. This first wave is intelligence grafted onto the editor experience, making coding faster and less error-prone but still anchored in the traditional environment of text editing.

2. Headless Code Generation

The second pattern skips the editor entirely. Agents such as Google’s experimental Jules, Cognition’s Devin, and Factory.ai aim to commit meaningful code changes directly to repositories. This bypasses the local editor and introduces a more autonomous, AI-driven approach to code creation.

3. A Unified Interface Layer on Top of “Dumb” Editors

Now ChatGPT hints at a third path: an interface layer that reads from and writes to applications directly through something like an accessibility API. Instead of relying on editor-specific extensions, ChatGPT might become a universal interaction layer that orchestrates multiple tools. It could act as an intelligent “bridge” between you and a variety of applications, none of which need to be made “smart” themselves.
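The “bridge” idea can be sketched in a few lines under some stated assumptions. Every app is reduced to a hypothetical `TextSurface` that can only be read and written, which is roughly what an accessibility API offers. A placeholder `toy_model` function stands in for the language model. All class and function names here are illustrative and are not part of any ChatGPT or operating-system API. The design point is that `InMemorySurface`, like a “dumb” editor, contains no intelligence at all; the only smart component is the `Bridge`.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Dict, Protocol


class TextSurface(Protocol):
    """Hypothetical uniform handle an accessibility layer might give us for any app."""

    def read(self) -> str: ...

    def write(self, text: str) -> None: ...


@dataclass
class InMemorySurface:
    """Stand-in for a 'dumb' app: a text buffer with no AI features of its own."""

    text: str = ""

    def read(self) -> str:
        return self.text

    def write(self, text: str) -> None:
        self.text = text


class Bridge:
    """Hypothetical orchestration layer; the only component that is 'smart'."""

    def __init__(self, model: Callable[[str, str], str]) -> None:
        # Stand-in for a language-model call: (instruction, text) -> new text.
        self.model = model
        self.surfaces: Dict[str, TextSurface] = {}

    def attach(self, name: str, surface: TextSurface) -> None:
        self.surfaces[name] = surface

    def edit(self, app: str, instruction: str) -> str:
        """Rewrite an app's content in place, with no plugin inside the app."""
        surface = self.surfaces[app]
        updated = self.model(instruction, surface.read())
        surface.write(updated)
        return updated

    def transfer(self, src: str, dst: str, instruction: str) -> str:
        """Replace copy-and-paste: read one app, transform, write into another."""
        updated = self.model(instruction, self.surfaces[src].read())
        self.surfaces[dst].write(updated)
        return updated


def toy_model(instruction: str, text: str) -> str:
    """Deterministic placeholder for an LLM so the sketch runs offline."""
    if instruction == "uppercase":
        return text.upper()
    return text


if __name__ == "__main__":
    bridge = Bridge(toy_model)
    notes, editor = InMemorySurface("add feature x"), InMemorySurface()
    bridge.attach("notes", notes)
    bridge.attach("editor", editor)
    bridge.transfer("notes", "editor", "uppercase")
    print(editor.text)
```

Swapping `toy_model` for a real model call, or swapping `InMemorySurface` for an adapter backed by a real accessibility API, would leave `Bridge` unchanged. That is the sense in which the applications can stay “dumb.”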

Which of these paths is most likely to dominate? If the contest were only between the first two paradigms, smart autocompletion within editors versus headless code generation, headless would eventually win. Why? Because real engineering teams aren’t just pairs of programmers making small suggestions in one another’s IDEs. They’re networks of autonomous, capable individuals who propose high-level architectural changes, iterate on significant refactors, and collaborate through code review rather than line-by-line autocompletion. Once AI models become “smart enough,” human interaction with code will shift from typing in editors to guiding AI agents that produce fully formed changes directly in repositories.

Rethinking the Interface of Collaboration

This raises an important question: where do we guide these AI agents? Until now, it seemed natural that prompting would happen inside GitHub’s interface (or any other system of record). After all, GitHub is where we host, review, and merge code. It has maximum context, and in an agentic world it doesn’t need a sophisticated editor UI. But OpenAI’s demonstration suggests another possibility: a unified workspace like ChatGPT itself could become the place where prompts are given. Why browse GitHub when the AI interface can read from GitHub, write to it, and ultimately manage the entire creation process?

Imagine prompting ChatGPT: “Create a new PR that adds feature X.” With deeper GitHub integration, ChatGPT could read the codebase for context, spin up a new branch, write the code, push it, and open a pull request, all without leaving the ChatGPT window. The human developer’s job shifts from typing code to supervising AI-driven tasks, guiding architectural decisions, and ensuring strategic alignment. Copy-and-paste dissolves, replaced by a frictionless “everything” interface. GitHub is reduced to a system of record and pipes, and its custom-built interface begins to melt away.
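The workflow behind that prompt maps onto a handful of well-known operations. The minimal sketch below only *plans* those steps and does not execute them. It produces four ordinary git commands plus a request to GitHub’s REST endpoint for creating a pull request (`POST /repos/{owner}/{repo}/pulls`, with `title`, `head`, `base`, and `body` fields). It assumes that the generated code already exists in a local checkout and that the remote is named `origin`. The owner, repository, and branch names in the usage section are made up. `PullRequestPlan` and `plan_pull_request` are hypothetical names, and this is not a description of how ChatGPT implements the feature.

```python
from __future__ import annotations

import shlex
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class PullRequestPlan:
    """The side effects an agent would perform, captured as data for review."""

    git_commands: List[List[str]]
    api_method: str
    api_path: str
    api_body: Dict[str, str]


def plan_pull_request(
    owner: str, repo: str, branch: str, title: str, body: str, base: str = "main"
) -> PullRequestPlan:
    """Sketch the steps behind 'Create a new PR that adds feature X'.

    Assumes the generated code has already been written to the working tree.
    Nothing is executed here; a real agent would run the git commands (e.g. via
    subprocess) and send the API request with an authentication token.
    """
    git_commands = [
        ["git", "checkout", "-b", branch],        # spin up a new branch
        ["git", "add", "--all"],                  # stage the generated changes
        ["git", "commit", "-m", title],           # record them
        ["git", "push", "-u", "origin", branch],  # publish the branch
    ]
    return PullRequestPlan(
        git_commands=git_commands,
        # GitHub REST API endpoint for creating a pull request.
        api_method="POST",
        api_path=f"/repos/{owner}/{repo}/pulls",
        api_body={"title": title, "head": branch, "base": base, "body": body},
    )


if __name__ == "__main__":
    plan = plan_pull_request(
        "example-org", "example-repo", "feature-x", "Add feature X",
        "Drafted by an AI agent; please review.",
    )
    for cmd in plan.git_commands:
        print(shlex.join(cmd))
    print(plan.api_method, plan.api_path, plan.api_body)
```

Returning a plan instead of acting immediately fits the supervisory role sketched for the human developer. Someone can inspect the exact commands and PR payload before anything touches the repository.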

A New Model of Creation

As these AI-driven interactions expand, we might see a gradual dissolution of traditional editor boundaries. Notion for docs, Figma for designs, and VSCode for code might still exist as data sources and specialized back-ends, but the creation interface may unify into a universal “front end” like ChatGPT. AI will act as the translator and integrator, pulling context from Notion pages, committing code changes directly into GitHub, or exporting design frames to a Figma file - all initiated by commands in a single interface.

In this scenario, each platform’s differentiated value lies in the mechanical systems it provides, such as CI/CD pipelines, merge queues, or high-performance data synchronization, rather than in its role as a primary user interface. The specialized apps remain essential for integration, storage, performance, and compliance, but the user’s day-to-day creation and editing could shift to a single, AI-driven surface.

Who Loses, Who Wins?

Traditional editor apps risk becoming “headless back-ends” for AI agents. Instead of navigating multiple tools, users could treat ChatGPT as their main interaction point. The winners will be pipes-based tools that create value by storing, moving, and computing data. The losers will be per-seat tools that pride themselves on niche, custom-built editing UIs, because their pricing assumes people do their work inside that UI.

Side note: people who rely on assistive technologies such as screen readers also stand to be big short-term winners. This could trigger the biggest push for screen reader API adoption ever seen.

Ultimately, the shift is toward a universal creation interface - an “everything-editor” - powered by AI. As intelligence becomes commoditized, convenience and frictionless integration matter most. Content, code, and data all flow through a unified channel. The future could be one where the lines between tool, assistant, and environment blur, and where the “app” you interact with is less important than the intelligence and integration that the app enables.